Case study: Teradata to Snowflake migration for a large retailer

Customer situation

The customer is a leading FTSE 100 UK-based retailer operating a large (approx 300 TB, 10.000+ tables, 100.000+ columns, 30.000.000+ new transactions per day) data warehouse on the Teradata platform. Reports and data from it were used primarily by the finance department and many other teams to manage their performance and input into various analytics tools.

The customer is ongoing a transformation onto a strategic platform. Snowflake was selected as the best-fitting, most performant solution. This strategic platform is also coupled with a completely new data modeling approach according to the Data Vault 2.0 standard. But as this is a long-term project, it was necessary to find an interim solution to address issues (low performance, expensive to operate) of the current Teradata DWH as soon as possible.

Selected approach

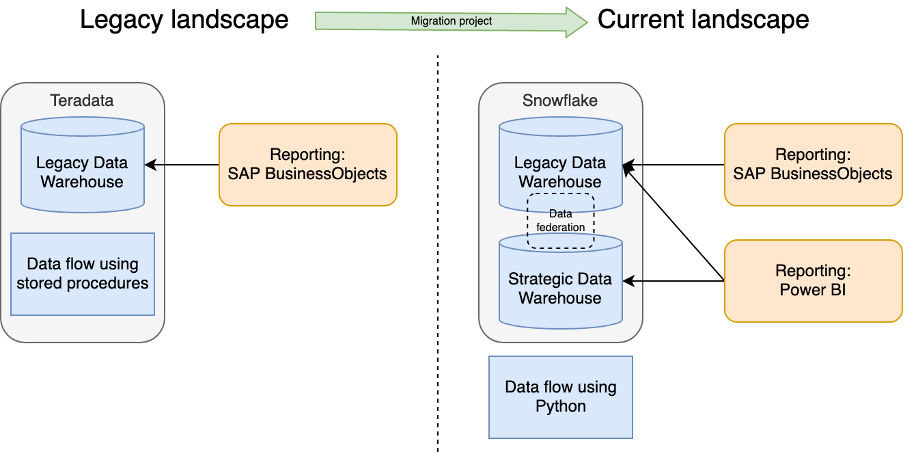

For the interim solution, our UK partner company LEIT DATA, selected migration of existing Teradata DB into Snowflake. We decided to keep the current data model to retain the backward compatibility of reports and integrations and to be as quick and efficient as possible. This enabled us to maintain existing reporting tools (e.g., SAP BusinessObjects) with only a minimum tweak. The strategic project also includes a new reporting solution (PowerBI) successfully integrated with the new Snowflake DB.

The Teradata ingestion pipeline consisted of many stored procedures run by various triggers. This solution was replaced with a more maintainable set of Python scripts ingesting data from S3 batch files already generated for the Teradata solution.

We also found that the current Teradata security model wasn’t manageable and unscalable as it consisted of more than 1 million individual SQL statements (“GRANT”s). We implemented a new security model leveraging the native Snowflake data classification model. This enabled the customer to control access to columns and tables containing sensitive PII data efficiently.

The migration took a team of approx—10 people over 1.5 years. Much effort was spent on extensive testing to ensure that the reports were accurate “to the penny.”

High-level architecture transition

Benefits

Data Warehouse migration to Snowflake enabled the customer to decommission legacy Teradata platform support and maintenance costs and eliminate a dedicated team of 8 Teradata support contractors, replaced by a smaller permanent Data Engineering squad focusing on strategic data value products.

This alone resulted in multi-million £ per year savings. Snowflake made us rethink how the team delivered Data Products and optimized team effectiveness. This led to significant optimization in decreased time-to-market from 3+ months to less than four weeks.

Snowflake Data federation allows easy data sharing of migrated database (in legacy format) with the new strategic data warehouse (in Data Vault format). This accelerated the migration path to the strategic data platform.

It also had these additional benefits:

- Orders of magnitude speed up report generation and data processing.

- An easily manageable, scalable, and auditable security model ensures full GDPR and PII protection compliance.

- Reduced complexity for data visualization, science, and analytics communities within the organization increases productivity.

Lessons learned

Here are key issues we came across during the project and lessons learned from them:

- Large volume data egress from the Teradata platform seems throttled on the hardware level. Export ran extremely slow (300 TB took a month to export), and we investigated every other issue (network stack, landing zone, etc.). We concluded that the Teradata platform itself causes the root cause.

- Teradata platform has strange behavior of decimals rounding. This was further augmented by the lousy design of the original data model (use of float instead of decimal for storing financial data). This led to different results when reconciling and cross-checking reports from Teradata vs. Snowflake. Each such discrepancy had to be investigated fully, resulting in a lengthy testing period.

- Some companies provide services for out-of-support Teradata infrastructure (e.g., replacing failed disks). They may be interested in buying out existing systems after migration.

- As part of any large-scale data migration, it is suggested to review all existing reports to see which ones are not used at all or very sporadically. This can be done by reviewing report access logs or replacing reports with unclear users or usage with a static text to contact the migration team. The goal is to eliminate legacy reports and thus reduce overall testing efforts.

Contact us to get started

Our team participated in critical architecture design and delivery management roles. Contact us for a free assessment session where we will, together with your data leadership team, evaluate the potential for savings and enhance your agility to deliver data-value products.